The Invisible Frontier of Digital Risk

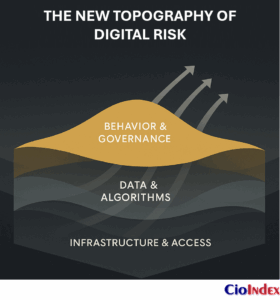

Every transformation expands capability — and with it, exposure. The more an organization connects, automates, and integrates, the less visible its boundaries become. Risk no longer enters from the outside; it emerges from within — the byproduct of acceleration itself.

Digital transformation was meant to simplify complexity. Instead, it has redefined it. Systems once separated by function now operate as living networks of code, data, and decision logic. Their interactions are instantaneous, their consequences exponential. Each new API, cloud instance, or machine-learning model adds not only utility but unpredictability — a new surface of dependency that governance must somehow see and understand.

This is the new topography of digital risk: not a perimeter to defend, but an ecosystem to interpret.

The Shift from External Threat to Internal Entropy

Traditional risk management assumed danger lived beyond the firewall — cyber intrusions, data breaches, or compliance failures. That logic no longer holds. As enterprises digitize, risk becomes endogenous — generated by the same velocity that fuels innovation.

Every integration is a decision about trust. Every automation inherits the assumptions of its designer. Every dataset carries the biases of its origin. The more digital the enterprise becomes, the more risk it creates through its own acceleration.

The enterprise is no longer a fortress defending against the outside world; it is a living organism continually regenerating its own vulnerabilities. The very systems built to enhance intelligence can now obscure it — algorithms so fast and opaque that their decisions cannot be fully traced or explained.

The result is digital entropy: the natural tendency of complex systems to drift toward disorder unless continuously interpreted and renewed. Every enterprise emits entropy the way stars emit heat — energy expended to keep order from collapsing.

The Economics of Entropy

In the industrial era, risk was managed through redundancy — insurance, compliance, and contingency planning. In the digital era, redundancy becomes fragility. Every layer of process or technology added for safety introduces new interfaces to maintain, new data flows to secure, and new dependencies to govern.

The cost of stability now compounds. Organizations expend increasing energy maintaining coherence — reconciling systems that evolve faster than the structures meant to oversee them. This hidden expenditure defines the Entropy Economy — the cost of sustaining clarity in environments that change faster than comprehension.

As interpretive effort replaces productive effort, talent energy shifts from creation to correction — a silent drain on performance. When governance cannot keep pace, the system defaults to inertia. Decisions become reactive, oversight lags behind implementation, and risk migrates silently across the enterprise, invisible until it converges.

Governance Lag — The New Vulnerability

Every transformation introduces a time gap between capability and comprehension. New platforms go live before oversight frameworks mature; data proliferates before its lineage is documented; algorithms make decisions before accountability is assigned. These are not technical flaws — they are temporal ones.

Governance lag has become the most underestimated vulnerability of modern enterprises. It is the delay between what the system can do and what leadership understands it to be doing. The longer the lag, the faster risk compounds — not linearly, but exponentially.

This is why even technologically mature organizations face unexpected crises: biased algorithms, privacy violations, or shadow systems accumulating unnoticed in the cloud. They are not lapses of control but lapses of visibility. The problem is not the absence of governance, but its obsolescence.

From Compliance to Consciousness

The governance systems inherited from the industrial age were designed to regulate static processes. They measure conformity, not learning; they audit outcomes, not behavior. In the digital environment, compliance without consciousness creates false security. Dashboards glow green even as systems diverge from their original intent.

The challenge is not to strengthen control but to deepen comprehension. Governance must evolve into a feedback architecture — one that measures how systems learn, not just what they report. Oversight should sense deviation before it escalates, interpreting risk as information rather than interruption.

Digital transformation, in this sense, demands governance by learning. Systems must be designed to reveal their own behavior, not conceal it behind metrics. Properly governed, risk becomes intelligence — the feedback loop through which the enterprise perceives itself.

The Reframing of Risk

In the analog world, risk was an event. In the digital world, it is a condition. It no longer arrives as disruption from without; it accumulates as complexity from within. The modern enterprise must therefore treat risk not as a problem to solve but as a pattern to read.

To read that pattern requires new forms of intelligence — technical, ethical, and organizational. It demands transparency as architecture, not aspiration. And it calls for leadership that interprets risk not as failure but as feedback — evidence of where systems learn faster than institutions can.

The invisible frontier of digital risk is not defined by threat but by understanding. Every transformation brings the enterprise closer to that boundary: the moment when control gives way to cognition. The organizations that cross it will not be those that harden themselves, but those that learn to perceive themselves.

Technology amplifies possibility; risk amplifies meaning. The more intelligent a system becomes, the more deeply it must understand its own uncertainty.

Shadow IT — Innovation in the Dark

Every transformation begins with the promise of empowerment. Cloud platforms, low-code tools, and self-service analytics democratize capability across the enterprise. Yet empowerment without alignment produces its own paradox: innovation that flourishes in the shadows.

Shadow IT was once a nuisance — a spreadsheet macro here, a rogue database there. Today it is an invisible parallel architecture. The same decentralization that fuels agility has fragmented accountability. Departments deploy cloud tools and automations beyond enterprise sight. What began as creativity has become unintended complexity — the quiet multiplication of systems without design coherence.

The Anatomy of an Invisible System

Shadow IT is not a technical defect but a structural symptom — evidence that oversight has failed to scale with curiosity. It emerges wherever the impulse to solve problems locally outpaces the organization’s capacity to support them centrally.

Modern enterprises operate like federations: dozens of business units, each equipped with the means to build, integrate, and deploy. The proliferation of SaaS applications, microservices, and API marketplaces enables near-instant capability but diffuses control. Every unregistered workflow or unsanctioned integration expands the perimeter of exposure.

Analysts estimate that between 30 and 40 percent of IT spend now occurs outside formal control. This is not rebellion; it is inertia — the momentum of autonomy unconstrained by architecture. Shadow IT is governance lag in miniature: the same temporal gap between capability and comprehension replayed inside every business unit.

The Psychology of Autonomy

Shadow IT thrives where oversight is perceived as friction. Employees rarely act out of defiance; they act out of urgency. When the official path is slow, they improvise. When approval cycles lag behind opportunity, experimentation migrates underground.

Each shadow solution represents an intent to improve the system from within, an act of creative improvisation born from constraint. But intent does not immunize impact. What begins as empowerment often matures into exposure: duplicated data, unsynchronized logic, and fragmented user experience. The enterprise grows faster than it can see itself.

This dynamic reveals a deeper cultural imbalance — a misunderstanding of governance itself. People turn to unsanctioned tools not because they reject discipline but because they cannot feel its purpose. Where oversight constrains rather than coordinates, improvisation becomes policy. Leaders must make governance feel like guidance, not gatekeeping.

The Economics of Fragmentation

Every ungoverned system carries a compounding cost. Duplicated functionality inflates subscription fees; inconsistent data inflates decision risk; unmanaged integrations inflate security overhead. The invisible tax on transformation is not the cost of technology itself but the price of reconciling what was never designed to connect.

Over time, this fragmentation distorts financial visibility. Budgets blur between departments, usage metrics diverge, and economies of scale dissolve. The result is an illusion of progress — rapid deployment masking declining efficiency.

Shadow IT converts innovation energy into maintenance energy. Talent that could advance digital capability is instead absorbed by reconciliation — aligning formats, merging APIs, correcting discrepancies. What begins as localized experimentation aggregates into systemic drag, quietly inflating the enterprise’s cost of coherence.

Governance Lag in Miniature

At its core, Shadow IT embodies the same condition described in the Entropy Economy — the cost of maintaining coherence as systems multiply. It is the operational echo of governance lag: the delay between where capability exists and where accountability resides.

The organization can do more than it can safely comprehend. Each unmanaged instance becomes a local optimization that undermines systemic design. The more success these local systems achieve, the harder they are to retire. Executives become dependent on tools they never approved, data they cannot trace, and processes they cannot audit.

Shadow IT thus evolves from a short-term fix into a structural dependency — a hidden layer of critical infrastructure operating without visibility.

From Prohibition to Integration

Traditional responses — bans, crackdowns, audits — treat Shadow IT as misconduct. In reality, it is a design problem. Eradication is neither possible nor productive; every attempt to suppress it generates new workarounds. The path forward is not prohibition but integration.

Integration begins with acknowledgment: mapping the shadow before judging it. Inventory every external subscription, data flow, and automation pipeline. Translate the ecosystem of improvisation into a system of record. What was hidden becomes visible; what was unmanaged becomes measurable.
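A minimal sketch of what such a system of record might look like, assuming a flat inventory in which each discovered asset carries an owner and a sensitivity flag. The field names, the sample assets, and the review rule are illustrative assumptions, not a prescribed schema.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ShadowAsset:
    """One discovered subscription, data flow, or automation pipeline (hypothetical schema)."""
    name: str
    kind: str                      # "subscription", "data_flow", or "automation"
    business_unit: str
    owner: Optional[str] = None    # accountable person, if one is known
    handles_sensitive_data: bool = False


def needs_review(asset: ShadowAsset) -> bool:
    """Assumed rule: flag anything without an owner or touching sensitive data."""
    return asset.owner is None or asset.handles_sensitive_data


# Illustrative entries only; a real inventory would come from expense data,
# single sign-on logs, or network discovery.
inventory: List[ShadowAsset] = [
    ShadowAsset("team-crm-export", "data_flow", "Sales", handles_sensitive_data=True),
    ShadowAsset("survey-tool", "subscription", "Marketing", owner="j.doe"),
    ShadowAsset("invoice-bot", "automation", "Finance"),
]

review_queue = [asset.name for asset in inventory if needs_review(asset)]
print(review_queue)
```

The point of the sketch is the order of operations: the asset is recorded first and judged second, so nothing has to be approved before it can be seen.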

Next comes federation. Oversight must operate like a network — central principles with distributed enforcement. Instead of dictating tools, define protocols. Instead of demanding approval, enable transparency. When experimentation is visible, it becomes governable.

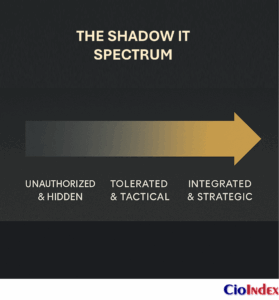

The Shadow IT Equation:

Autonomy – Oversight = Exposure

Autonomy + Integration = Intelligence

Federated governance transforms Shadow IT from liability into learning — a discovery engine for unmet needs and process gaps. Each rogue system reveals where bureaucracy exceeds design capacity. Studied correctly, the shadows illuminate the system itself.

Cultural Reframing

Absorbing Shadow IT requires more than policy; it requires empathy. The same creativity that drives unsanctioned innovation can be harnessed to drive institutional renewal. Organizations should cultivate safe autonomy — clear guardrails paired with rapid pathways to legitimize useful experiments.

Innovation offices, internal marketplaces, or “innovation sandboxes” formalize the grey zone between compliance and creativity. Here, experimentation is visible by design — logged, reviewed, and potentially scaled. This reframing signals that oversight is not surveillance but collaboration.

When people understand that transparency protects possibility rather than punishing it, they bring their ingenuity back into the light.

The Path to Coherence

The goal of transformation is not control but coherence — the ability of distributed systems and minds to act with shared intent. Shadow IT reveals where coherence has fractured. Integrating it is less about removing risk than reclaiming alignment.

A mature enterprise does not seek to eliminate its shadows; it seeks to illuminate them continuously. Visibility is not a constraint on freedom but its precondition. When governance learns to scale with creativity, the organization ceases to oscillate between order and chaos. It begins to think as one system.

Innovation moves fastest in the dark — until the enterprise learns how to see.

What distinguishes digital maturity is not the absence of shadow, but the presence of light that can reach it.

Data Drift and the Ethics of Automation

Every organization now lives within an ocean of data — fluid, immense, and constantly in motion. What once described the business now defines it. But in this abundance lies a paradox: the more information an enterprise collects, the less certain it becomes of what that information truly means.

Digital transformation has made data omnipresent but not infallible. As systems learn from experience, they also inherit distortion. Algorithms trained on yesterday’s patterns make tomorrow’s decisions. Over time, the dataset begins to drift — quietly diverging from the reality it was meant to represent. This is not a failure of technology; it is the entropy of meaning.

From Information to Interpretation

Every dataset is a frozen assumption about how the world works — a record of what someone once believed to be important. Each carries the imprint of context: who collected it, what they measured, and what they ignored. When that context is stripped away and fed into automation, data becomes unanchored from interpretation.

The velocity of automation accelerates this drift. Machine-learning models make decisions in milliseconds and may be retrained continuously; the humans who govern them move in cycles of quarters. Between those speeds, comprehension collapses. The algorithm’s world becomes self-referential, learning from its own projections rather than lived reality.

This is data drift: when the informational fabric of a system moves faster than the frameworks meant to understand it. Once drift begins, every decision derived from it amplifies the deviation. Like a compass slightly misaligned, the error grows invisible until it redefines direction itself.
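Drift of this kind can be made measurable. One common technique, used here purely as an illustration and not named in the text, is the Population Stability Index, which compares how a value was distributed when a model was built against how it is distributed now. The function name, the bin count, and the sample data below are assumptions for the sketch.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one variable; 0.0 means identical bin proportions."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    base, cur = proportions(baseline), proportions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Illustrative data: scores seen at training time versus scores seen later.
training_scores = [i / 100 for i in range(100)]
live_scores = [0.5 + i / 200 for i in range(100)]

print(population_stability_index(training_scores, training_scores))
print(population_stability_index(training_scores, live_scores))
```

A frequently cited rule of thumb treats values above roughly 0.25 as a significant shift, though any threshold is a judgment call. The metric does not say why the world moved, only that the model's picture of it is aging.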

Data, like light, illuminates only what its source allows it to see.

Algorithmic Blindness

Automation amplifies not just data but the assumptions embedded within it. A model is only as objective as the lens that shaped its inputs. When bias exists in data, automation doesn’t remove it — it industrializes it.

This creates algorithmic blindness: the inability of a system to see beyond the boundaries of its own training. Left ungoverned, it can reproduce inequity at digital scale — approving credit unevenly, prioritizing resources unfairly, or predicting outcomes through the lens of past prejudice.

Ethical failure in automation rarely begins with intent; it begins with omission. The dataset is incomplete, the context misunderstood, or the feedback loop broken. Once encoded, those omissions acquire permanence. The organization continues to “learn,” but what it learns is the repetition of its own bias.

Ethical Debt — The Compounding Cost of Omission

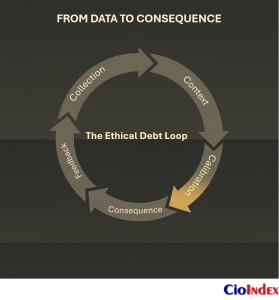

Every unexamined dataset, every opaque model, and every deferred audit accumulates ethical debt — the moral and operational liability created when automation moves faster than accountability.

Like technical debt, ethical debt compounds silently. Its interest is paid in trust — by customers, regulators, and employees who no longer believe the system is fair or intelligible.

Ethical debt accrues in three layers:

- Pre-model distortion — bias in collection and labeling.

- In-model design bias — assumptions embedded in structure and parameters.

- Post-model reinforcement — feedback loops that amplify early errors.

When oversight neglects any layer, ethical drift accelerates. The gap between what the system optimizes for and what the organization values widens.

In the Entropy Economy, this is the most dangerous inefficiency — not wasted capital but wasted credibility. Trust, once lost, has no residual value.

Governance in the Age of Learning Systems

Traditional oversight frameworks were built for static systems. They certify compliance, not cognition. But automated systems evolve through feedback; every decision reshapes the data that trains the next. Oversight must therefore evolve from auditing outputs to understanding learning behavior.

Effective governance in learning systems operates across three simultaneous layers:

- Data provenance — tracing origin, context, and consent.

- Model explainability — ensuring decision logic remains interpretable.

- Outcome accountability — mapping consequences back to intent.

These layers form an ethical circuit. When the circuit remains intact, learning and accountability stay aligned. Break it, and oversight collapses into compliance — ticking boxes while algorithms rewrite their own rules.
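One way to picture the circuit is as a record attached to every automated decision, with a check that reports which layer is missing. The sketch below is a hypothetical structure; the field names and the completeness rule are assumptions chosen to mirror the three layers above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DecisionRecord:
    """Hypothetical audit record for one automated decision."""
    decision_id: str
    data_sources: List[str] = field(default_factory=list)  # provenance: origin
    consent_basis: Optional[str] = None                     # provenance: consent
    explanation: Optional[str] = None                       # explainability
    accountable_owner: Optional[str] = None                 # accountability
    intended_outcome: Optional[str] = None                  # accountability: intent


def broken_links(record: DecisionRecord) -> List[str]:
    """Return the governance layers this record fails to close."""
    gaps = []
    if not record.data_sources or not record.consent_basis:
        gaps.append("data provenance")
    if not record.explanation:
        gaps.append("model explainability")
    if not record.accountable_owner or not record.intended_outcome:
        gaps.append("outcome accountability")
    return gaps


complete = DecisionRecord(
    decision_id="loan-0001",
    data_sources=["application_form"],
    consent_basis="customer agreement",
    explanation="income-to-debt ratio below policy threshold",
    accountable_owner="credit-risk lead",
    intended_outcome="consistent lending decisions",
)
opaque = DecisionRecord(decision_id="loan-0002", data_sources=["application_form"])

print(broken_links(complete))
print(broken_links(opaque))
```

A record with no gaps does not prove a decision was fair; it only shows that someone could trace, explain, and own it.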

Mature enterprises treat explainability not as an afterthought but as infrastructure. Transparency becomes the architecture of trust. If a decision cannot be explained, it cannot be owned; if it cannot be owned, it cannot be ethical.

When oversight of learning systems reaches the boardroom, ethics becomes governance, not commentary.

The Economics of Meaning

Data drift is not only a moral hazard but an economic one. When analytics diverge from truth, strategy diverges from value. Organizations begin optimizing for the illusion of accuracy — improving metrics that no longer measure reality.

Every misinformed algorithm multiplies inefficiency downstream: wasted marketing, mispriced risk, mistargeted investment. The cost of correction grows with delay. Research shows that enterprises lacking active data-governance discipline spend up to 40 percent more on model maintenance than those that monitor continuously — the price of unexamined learning.

Ethical clarity preserves return velocity. The clearer the decision lineage, the faster insight compounds — turning transparency into performance.

Ethical governance, therefore, is not restraint on innovation but its multiplier. When models are transparent, they learn faster from error. When data lineage is visible, decisions strengthen through reuse. In the long run, clarity outperforms speed.

From Bias to Balance

Automation has made organizations extraordinarily capable — and proportionally vulnerable. Machines now make decisions at a scale no human process can match. The question is not whether they will err, but how quickly they can recover understanding when they do.

The goal of governance is not perfection but balance — ensuring that the rate of learning exceeds the rate of drift. Ethical intelligence, the ability to detect and correct distortion, becomes a competitive advantage.

Leaders must recognize that fairness and efficiency are not opposing goals but interdependent ones. Ethical clarity reduces rework, minimizes regulatory exposure, and protects the organization’s most fragile asset: belief in its integrity.

Toward Ethical Resilience

As automation expands, the boundary between operational risk and moral responsibility dissolves. Ethics becomes an engineering discipline — a form of system hygiene that prevents small distortions from becoming structural fractures.

This is ethical resilience: the capacity of a system to preserve integrity while learning at speed. It demands continuous calibration, not occasional review. Enterprises must treat data and algorithms as living participants in governance — monitored with the same rigor applied to finance or security.

Some organizations now institutionalize algorithmic audit boards — cross-disciplinary councils of technologists, ethicists, and executives examining bias and transparency at the same cadence as financial reporting. Others embed “ethical checkpoints” into model lifecycles, pausing deployment until traceability is proven.

These mechanisms mark the shift from reactive ethics to designed ethics — from compliance to conscience.

The measure of intelligence is not automation, but awareness.

When systems can explain themselves, they cease to merely decide — they begin to understand.

The Human Factor — Cognitive, Cultural, and Decision Bias

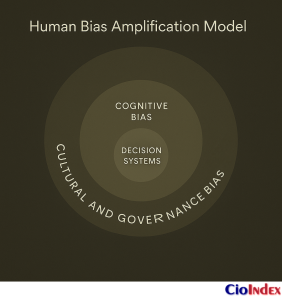

Every system reflects its designers. Behind every algorithm lies a human assumption, and behind every assumption, a belief about how the world should work. Technology automates decisions but does not liberate them from human influence. It merely scales it.

Digital transformation often aspires to remove human error. In practice, it automates it. Biases once contained within departments or leadership teams are now embedded in data models, decision engines, and policy workflows — magnified by speed and scale. The more intelligent systems become, the more invisible their inherited logic grows.

The biases encoded in data are reflections of those who created it — human distortion rendered in digital form. The next frontier of risk, therefore, is not algorithmic but cognitive.

Automation Complacency

Humans are wired to trust systems that appear precise. The dashboard glows green; the model produces a score; the alert clears. Authority transfers quietly from judgment to interface.

This phenomenon, automation complacency, arises when decision-makers substitute procedural certainty for critical thinking. Once a model is validated, its output becomes ritual. What began as assistance becomes abdication.

The psychology behind this is evolutionary. Cognitive effort is costly; delegation is efficient. The more complex the environment, the greater the temptation to defer to automated certainty. But when people stop questioning, systems stop learning. Errors accumulate silently — not through malfunction but through misplaced faith.

Trust itself is not the problem; unexamined trust is. A healthy digital culture resists this comfort. It teaches leaders to interpret systems, not obey them. It rewards discernment over deference.

Cognitive Bias — The Mirror of Certainty

Every human decision contains distortion. We filter information through emotion, experience, and expectation — compressing ambiguity into patterns that feel familiar. In the digital context, these distortions become encoded as defaults.

Confirmation bias reinforces legacy assumptions: we trust outputs that validate our views and ignore those that don’t.

Anchoring bias skews design decisions toward the first data point seen.

Availability bias favors the measurable over the meaningful.

When these tendencies shape data labeling, model parameters, or evaluation metrics, bias becomes infrastructure. Systems begin predicting not what is true, but what is expected.

Cognitive bias is not a failure of intellect but of awareness. The antidote is not precision, but perspective — the discipline of questioning how one knows what one believes.

Cultural Bias — The Invisible Architecture of Agreement

If cognitive bias distorts individual perception, cultural bias distorts collective perception. Every organization carries a set of shared assumptions — what it rewards, what it fears, what it defines as success. These norms silently shape how technology is designed, deployed, and interpreted.

A culture that prizes speed over reflection will prioritize automation even when understanding lags. A culture that rewards certainty will suppress experimentation. Over time, the cultural logic of the enterprise becomes embedded in code — an invisible architecture of agreement.

This is why identical technologies behave differently across organizations. The same AI tool that accelerates innovation in one setting may entrench hierarchy in another. Culture determines whether technology is used to explore or to enforce.

To detect cultural bias, organizations must examine their rituals: how meetings are run, how dissent is handled, how mistakes are treated. These small moments reveal the true boundaries of learning. Technology does not create culture; it reveals it.

Bias is the lens through which light bends — invisible, yet shaping every pattern we see.

Governance Bias — The Slow Reflex

Oversight, too, is susceptible to its own distortion — the bias of delay. Governance structures built for predictability reflexively slow what they cannot fully grasp. Committees favor certainty over experimentation, control over comprehension.

This governance bias creates organizational friction precisely where agility is most needed. It manifests as excessive validation cycles, redundant approvals, and risk avoidance disguised as prudence. The result is institutional hesitation: a system capable of acting but afraid to move.

Modern oversight must replace suspicion with sensing — moving from control to curiosity. The goal is not to eliminate regulation but to accelerate awareness. Continuous review, transparent data lineage, and feedback loops transform governance from gatekeeper to guide.

When governance becomes reflective rather than reactive, bias turns into learning.

The Bias Amplification Effect

Digital systems do not invent bias; they compound it. When cognitive, cultural, and governance distortions interact, they form bias amplification loops — small preferences that escalate into structural patterns.

For example:

- A cultural bias for consensus discourages dissent.

- Cognitive bias reinforces decisions that confirm the consensus.

- Governance bias protects the established model from challenge.

Over time, the loop produces a closed epistemic system — an organization that believes its own data because it no longer knows how to disprove it. Innovation stalls not for lack of technology but for lack of curiosity.

Bias amplification is the cultural equivalent of data drift. Both occur when learning ceases to question its own logic. Bias erodes efficiency as surely as error — decisions built on distortion waste both trust and capital.

Designing for Human-in-the-Loop Intelligence

The antidote to bias is not offloading judgment to automation but cognitive integration: designing systems that extend human judgment rather than replace it.

Human-in-the-loop intelligence functions as a dynamic partnership: machine speed guided by human sense-making. This requires new forms of literacy — the ability to read algorithms, interpret confidence intervals, and challenge model assumptions without dismantling their utility.

Leaders must cultivate interpretive competence — the skill of asking questions machines cannot. “What does this pattern mean?” “What is it missing?” “Who is accountable if it’s wrong?”

When interpretive competence reaches executive decision forums, human judgment becomes a governed asset rather than an unmeasured risk. In mature organizations, this competence becomes part of governance architecture itself. Oversight meetings assess not only metrics but meaning.

The quality of governance is measured by how well it questions the logic of its own systems.

The Ethics of Perception

Every bias is a story about perception — about how humans assign meaning under uncertainty. Ethical design begins with acknowledging that no dataset is neutral and no model is omniscient. Fairness is not a technical property but a moral discipline: the willingness to see from another perspective.

Organizations that cultivate empathy — in design, testing, and leadership — develop resilience against bias. Empathy is not sentiment; it is situational awareness. It allows teams to anticipate how systems will behave when context changes, and to adjust before harm occurs.

As automation grows more sophisticated, ethics must become more human, not less.

The strength of intelligence lies not in what it knows, but in how it questions what it knows. Awareness, not automation, is the highest form of precision.

The Expanding Perimeter — Risk in the Age of Distributed Intelligence

Risk has escaped containment.

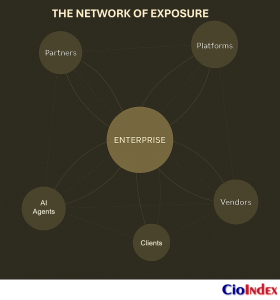

Once, an enterprise could draw its boundaries — networks within firewalls, data within databases, people within offices. Today those boundaries are porous by design. Cloud platforms, partner ecosystems, and AI agents form a web of intelligent interdependencies that no longer fits within traditional definitions of control.

The organization no longer operates as a singular entity but as a distributed organism — a network of human and machine intelligence that learns, decides, and adapts beyond any one perimeter. Risk now travels through trust.

From Perimeter to Network

The traditional perimeter was physical, then digital, now conceptual. Earlier, defense meant separation: building barriers, segmenting data, and hardening systems. Transformation inverted that logic. The modern enterprise competes through connection. Agility depends on access, and value creation occurs in the spaces between entities — APIs, data exchanges, shared algorithms, federated clouds.

Each of these connections expands the surface area of exposure.

Each new integration is both capability and conduit.

Distributed architectures enable scale, but they also dissolve ownership. When a process spans five vendors, three clouds, and one AI model, accountability becomes a shared variable — diluted across ecosystems that rarely fail in isolation.

Complexity does not distribute risk evenly; it multiplies it geometrically.

The Interdependence Dilemma

Digital ecosystems are interdependent by nature. This interdependence creates a paradox: the stronger the connection, the greater the vulnerability.

A software update from one supplier cascades across dozens of systems. A data misclassification in a partner environment propagates through shared analytics pipelines. A compromised AI model used by multiple organizations replicates error at scale.

When a major cloud provider experiences an outage, hundreds of enterprises realize how distributed dependency concentrates systemic exposure. In such networks, risk behaves like contagion, spreading not through intent but through interoperability.

This is the Interdependence Dilemma: every gain in efficiency introduces a potential vector of failure. The enterprise cannot disconnect without losing relevance, yet every new link demands a recalibration of trust.

Just as data drift undermines meaning within a single system, governance drift erodes assurance across networks. Traditional due-diligence models — periodic audits and contractual clauses — cannot govern in real time. Risk velocity now exceeds the cadence of review. The only sustainable defense is real-time situational awareness: the ability to perceive interdependence as it evolves.

AI Supply Chain Risk

Artificial intelligence adds a new layer of dependency — the model supply chain. Organizations increasingly rely on pre-trained models, public datasets, and third-party APIs they neither control nor fully understand.

When a model learns from biased data or interacts with another AI in unforeseen ways, errors propagate autonomously. One flawed training dataset can influence hundreds of downstream decisions.

This phenomenon creates model drift at scale — the compounding effect of interconnected AIs adapting to one another’s outputs. The system becomes a feedback web, amplifying uncertainty.

Oversight must now reach beyond the enterprise to its algorithmic ecosystem. That requires tracking:

- Model provenance — where algorithms originate and how they evolve.

- Data lineage — how information moves and transforms.

- Behavioral auditability — why a system acted as it did.
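As a rough illustration, the three tracking dimensions above could be captured in a single record attached to each automated decision. The field names and the `missing_fields` helper are assumptions made for this sketch, not an established schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class DecisionRecord:
    # Model provenance: where the algorithm came from and which version ran.
    model_name: str
    model_version: str
    model_source: str
    # Data lineage: the datasets that fed this decision.
    input_datasets: list
    # Behavioral auditability: what was decided and why.
    decision: str
    rationale: str
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

def missing_fields(record):
    """List the audit fields that were left empty on a record."""
    return [name for name, value in vars(record).items() if value in ("", None, [])]

record = DecisionRecord(
    model_name="route-predictor",      # hypothetical model
    model_version="2.3.1",
    model_source="third-party vendor",
    input_datasets=[],                 # lineage not captured
    decision="reroute shipment",
    rationale="",                      # no explanation captured
)
print(missing_fields(record))
```

A record with gaps like this one is itself a governance signal: the decision happened, but neither its inputs nor its reasoning can be reconstructed afterwards.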

The integrity of digital intelligence depends on the transparency of its ecosystem. Explainability is no longer only an ethical requirement; it is an operational necessity.

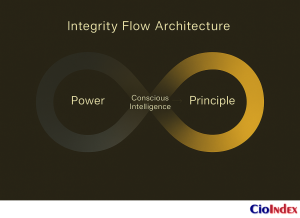

Trust as the New Firewall

In distributed environments, trust becomes the primary control surface. It is no longer sentiment; it is a measurable property.

Modern trust architectures rest on three pillars:

- Authenticity — verifying identity and integrity of data, models, and partners.

- Accountability — defining shared-liability frameworks across ecosystems.

- Auditability — enabling continuous verification through transparency and traceability.

Together, these pillars convert trust from abstraction to infrastructure.

But trust is fragile when it relies on assumption rather than evidence. Enterprises must engineer for verifiable trust — embedding traceable identity, cryptographic attestation, and behavioral analytics into the connective tissue of digital systems.
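To make "evidence rather than assumption" concrete, the sketch below tags an artifact (for example, a model file) with a keyed hash and later checks that the artifact still matches. It uses HMAC-SHA256 from the Python standard library as a simple stand-in; production attestation would more likely rely on asymmetric signatures and managed keys, and the key and payloads here are purely illustrative.

```python
import hashlib
import hmac

def attest(artifact: bytes, key: bytes) -> str:
    """Produce a keyed SHA-256 tag for an artifact."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()

def verify(artifact: bytes, key: bytes, attestation: str) -> bool:
    """Check that the artifact still matches its tag (constant-time comparison)."""
    return hmac.compare_digest(attest(artifact, key), attestation)

shared_key = b"demo-key-not-for-production"   # illustrative only
tag = attest(b"model-weights-v1", shared_key)

print(verify(b"model-weights-v1", shared_key, tag))   # unchanged artifact
print(verify(b"model-weights-v2", shared_key, tag))   # altered artifact
```

The design choice worth noting is that verification happens at every use, not once at onboarding, which is what turns trust from a contract clause into a runtime property.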

As data and AI agents act autonomously, the firewall becomes relational, not structural. Risk management evolves from “protect the inside” to “verify every interaction.”

In a world without edges, assurance replaces isolation.

The Economics of Exposure

Interdependence has economic gravity.

Every external dependency carries both a coordination cost and a premium of uncertainty.

When risk is shared, cost allocation blurs. Who pays for remediation when an AI vendor’s model introduces systemic bias? Who bears liability when an external API breach triggers compliance penalties?

Without transparency, distributed risk becomes hidden liability capital: obligations that remain off the balance sheet until failure reveals them. This liability appears as valuation volatility, compliance fines, and uninsurable exposure, costs that surface only when partners falter.

Quantifying this exposure requires new instruments: dynamic risk registers, accountability maps, and value-at-risk models for algorithmic systems. CIOs and CFOs must jointly translate exposure into economic language — turning visibility into predictability.
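As one possible reading of "value-at-risk for algorithmic systems," the sketch below applies the simplest historical-simulation form of VaR to a list of observed or simulated losses attributed to a model. The loss figures are invented for illustration, and the nearest-rank quantile rule is one of several conventions.

```python
import math

def value_at_risk(losses, confidence=0.95):
    """Historical VaR: the loss not exceeded in `confidence` of observed periods."""
    if not losses:
        raise ValueError("at least one loss observation is required")
    ordered = sorted(losses)
    # Nearest-rank quantile: smallest value covering the requested share of outcomes.
    index = max(0, math.ceil(confidence * len(ordered)) - 1)
    return ordered[index]

# Hypothetical weekly losses (in thousands) traced to one pricing model.
weekly_losses = [0, 0, 0, 0, 0, 10, 10, 20, 50, 200]
print(value_at_risk(weekly_losses, confidence=0.9))
```

Even a crude figure like this gives the CIO and CFO a shared unit of conversation: not "the model is risky," but "in nine weeks out of ten, the model costs us no more than this."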

This reframing elevates risk from compliance issue to capital dialogue.

From Resilience to Reciprocity

In distributed intelligence networks, resilience is reciprocal — no organization can be stronger than the weakest node in its ecosystem. Resilience must therefore be collective, not competitive.

This demands a shift from isolated robustness to collaborative assurance. Shared threat intelligence, interoperable governance standards, and joint model validation become the foundation of digital continuity.

Forward-looking enterprises now form trust alliances — cross-industry consortia pooling telemetry to identify systemic vulnerabilities faster than any participant alone.

Reciprocity transforms interdependence from liability into advantage. When ecosystems learn together, they protect together.

Every connection is a filament — carrying both energy and exposure.

The modern enterprise survives not by building higher walls, but by cultivating smarter networks.

The Governance of Distributed Intelligence

Managing distributed intelligence demands governance as adaptive as the systems it oversees. Static policy cannot contain dynamic ecosystems. Oversight must function as a living network — sensing, responding, and evolving in rhythm with its environment.

This entails:

- Continuous assurance — automated controls replacing periodic audits.

- Federated accountability — shared governance responsibilities across partners.

- Adaptive protocols — rules that evolve with context rather than resist it.

For CIOs, governance becomes orchestration — aligning incentives, accountability, and visibility across a network’s moving parts. The organization becomes less a hierarchy and more an ecosystem of alignment.

Governance at scale is not command; it is choreography.

Governing the Unseen — From Control to Conscious Oversight

The most dangerous risks are the ones that governance cannot see.

Transformation multiplies systems faster than oversight can interpret them. Each new platform, partnership, and model increases complexity — but also opacity. Dashboards assure control while reality diverges beneath the surface. The illusion of visibility has become one of the defining vulnerabilities of the digital era.

Enterprises do not lack information; they lack comprehension. The challenge is no longer control, but consciousness — the ability to sense what the organization is becoming as it evolves.

Awareness functions like light: it does not add mass, but it reveals shape.

From Visibility to Awareness

Traditional governance was designed for environments that stood still. It measured conformity, audited exceptions, and treated oversight as an end-state. But digital ecosystems are never still. They move, learn, and reconfigure in real time.

Governance must evolve from looking at systems to listening through them — from observation to interpretation, from enforcing compliance to understanding behavior. Visibility is a snapshot; awareness is a narrative.

This transition marks the shift from control to conscious oversight: governance that perceives patterns, learns from them, and adjusts as understanding deepens.

Conscious oversight does not replace structure; it refines it — embedding sense-making into system design. Dashboards become mirrors reflecting not only performance but organizational perception.

Awareness is not data volume; it is the precision of attention.

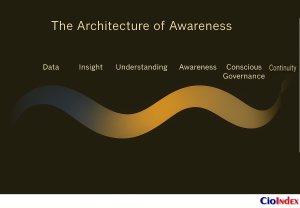

The Architecture of Insight

Oversight in the age of distributed intelligence requires a new architecture — one that transforms complexity into comprehension.

This Architecture of Insight is built on four foundational capabilities:

- Visibility — mapping interdependencies and exposing the invisible.

- Traceability — tracking how actions, data, and decisions propagate.

- Explainability — ensuring machine logic remains auditable in human terms.

- Adaptability — evolving controls and metrics at the speed of change.

They form a living governance fabric that senses rather than dictates.

These capabilities rely on constant feedback loops — continuous exchanges between what systems do and what leadership understands. Like ethical debt, unexamined feedback accumulates until governance lags behind learning — turning awareness delay into systemic risk.

When feedback flows freely, oversight becomes intelligence.

The Risk Intelligence Loop

Risk management has historically been episodic: identify, assess, mitigate, report. But digital ecosystems evolve continuously, not episodically. Static controls cannot anticipate dynamic exposure.

Modern governance operates through a Risk Intelligence Loop — an adaptive cycle of:

Signal → Sense → Respond → Learn → Reinforce

- Signal: Detect anomalies and weak indicators of deviation.

- Sense: Interpret meaning in operational and ethical context.

- Respond: Adjust controls, behaviors, or design.

- Learn: Capture insights for systemic improvement.

- Reinforce: Institutionalize learning to strengthen resilience.
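The five stages can be sketched as a small loop over a stream of operational readings. Everything here is an assumption made for illustration: the z-score test for Signal, the severity labels, the control actions, and the rule that a critical finding tightens the limit.

```python
from statistics import mean, pstdev

def signal(history, reading, threshold=3.0):
    """Signal: flag a reading far from the historical baseline (z-score test)."""
    spread = pstdev(history)
    if spread == 0:
        return reading != mean(history)
    return abs(reading - mean(history)) / spread > threshold

def sense(reading, limit):
    """Sense: interpret the anomaly against a business limit."""
    return "critical" if reading > limit else "watch"

def respond(severity):
    """Respond: map severity to a control adjustment."""
    return {"critical": "pause automation", "watch": "increase sampling"}[severity]

def reinforce(limit, severity, step=5):
    """Reinforce: tighten the limit after a critical finding."""
    return limit - step if severity == "critical" else limit

def run_loop(history, reading, limit, lessons):
    """One pass of the loop; returns (action, limit). Action is None if no signal fires."""
    if not signal(history, reading):
        history.append(reading)          # normal behavior extends the baseline
        return None, limit
    severity = sense(reading, limit)
    action = respond(severity)
    lessons.append({"reading": reading, "severity": severity, "action": action})  # Learn
    return action, reinforce(limit, severity)

history, lessons = [10, 11, 9, 10, 10], []
action, limit = run_loop(history, 30, 25, lessons)
print(action, limit, len(lessons))
```

Note that the loop changes its own parameters: the limit after an incident is not the limit before it. That small detail is what separates an adaptive cycle from a static control.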

Within the Architecture of Insight, this loop serves as governance’s living rhythm. Each iteration shortens the distance between awareness and action — turning oversight from a static mechanism into a cognitive function.

From Compliance to Cognition

Compliance provides assurance; cognition provides understanding. The two are not opposites. They are stages of maturity.

Compliance asks, “Are we following the rule?”

Cognition asks, “Do we still understand the system the rule was designed to govern?”

Enterprises that stop at compliance drift toward obsolescence; those that reach cognition cultivate resilience.

This evolution parallels the earlier shift from visibility to awareness. Where compliance validates, cognition contextualizes. Where compliance monitors, cognition interprets.

When oversight matures into cognition, governance ceases chasing anomalies and begins perceiving patterns.

The purpose of stewardship is not to enforce certainty but to cultivate understanding.

The Economics of Awareness

Awareness carries measurable value.

Organizations that sense and respond faster than disruption occurs gain compound advantage: fewer incidents, lower recovery costs, faster adaptation. Conversely, the cost of misperception — of governing too slowly — compounds invisibly.

Each moment of delayed insight incurs risk carry cost — the silent interest paid on misunderstanding. Multiplied across partners and algorithms, this cost rivals financial inefficiency.

The Economics of Awareness reframes governance as an investment in predictability:

- Every feedback mechanism is an early-warning asset.

- Every transparent model is a form of retained value.

- Every interpretable decision is an assurance instrument.

Enterprises that convert awareness into response speed translate resilience directly into return. When boards view awareness as capital, oversight becomes strategy.

Governance by Design

To govern the unseen, oversight must be designed in, not layered on.

This means embedding accountability into architecture:

- Self-documenting systems that log actions automatically.

- Transparent data fabrics that trace provenance end-to-end.

- Explainable AI frameworks that make reasoning retraceable.

Explainable design is not only technical but ethical — ensuring systems remain accountable as they evolve.

These features create structural awareness — systems capable of narrating their own behavior. Governance by design shifts oversight from inspection to instrumentation: transparency becomes continuous, not episodic.
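A minimal sketch of a self-documenting function is shown below: a decorator records every call, its inputs, and its result without the caller having to remember to log anything. The in-memory `AUDIT_LOG` list and the `approve_route` function are hypothetical stand-ins; a real system would write to durable, tamper-evident storage.

```python
import functools
from datetime import datetime, timezone

AUDIT_LOG = []  # in-memory stand-in for a durable audit store

def audited(func):
    """Wrap a function so that each successful call is recorded automatically."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        result = func(*args, **kwargs)
        AUDIT_LOG.append({
            "action": func.__name__,
            "args": repr(args),
            "kwargs": repr(kwargs),
            "result": repr(result),
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return result
    return wrapper

@audited
def approve_route(shipment_id, route):
    """Hypothetical business action whose behavior should be traceable."""
    return f"{shipment_id} -> {route}"

approve_route("S-1", route="north")
print(AUDIT_LOG[-1]["action"], AUDIT_LOG[-1]["result"])
```

This version records only calls that return normally; capturing failures as well would be a natural extension. The broader point is that the log is a side effect of architecture, not of discipline.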

When governance is embedded, learning becomes infrastructure.

Leadership for the Age of Awareness

Conscious oversight is as cultural as it is technical. It requires leadership that interprets governance not as constraint but as cognition.

CIOs, CISOs, and boards must evolve from risk reporters to sense-makers — curators of context who translate complexity into consequence. The best leaders do not ask for more dashboards; they ask better questions.

Their measure of maturity is not control volume but comprehension velocity — how quickly the organization understands itself after change.

For the CIO, this means orchestrating awareness: aligning technology, behavior, and governance in one responsive rhythm. For the board, it means recognizing that oversight without interpretation is observation without intelligence.

Leadership, in this sense, is the enterprise’s collective awareness. It is not about knowing everything, but about knowing what matters first.

Control defines limits; awareness defines possibility.

The Continuum of Conscious Oversight

Governance maturity follows an ascent from control to conscious oversight:

| Stage | Focus | Description |
|---|---|---|
| Control | Stability | Processes designed to prevent deviation. |
| Compliance | Conformity | Verification that standards are met. |
| Cognition | Understanding | Awareness of how systems behave and learn. |
| Conscious Oversight | Anticipation | Governance that perceives, predicts, and adapts in real time. |

The journey is recursive — each level feeding insight into the next. Mature enterprises operate across all four simultaneously, balancing assurance with adaptation.

The goal is not perfect control, but perpetual awareness.

Risk and Resilience — Designing for Continuity

Every transformation is a test of continuity.

Digital systems expand capability, but in doing so they expose fragility — in processes, in people, and in purpose. The true measure of transformation is not the absence of failure, but the capacity to recover meaning when failure occurs.

Resilience is not endurance; it is design — the deliberate architecture of adaptability.

The Nature of Digital Fragility

Digital ecosystems promise agility but deliver complexity. Every integration multiplies dependencies, every automation embeds assumptions, and every acceleration shortens the time available to respond. The faster an enterprise moves, the thinner its margin for error becomes.

Fragility often hides within success.

A process that performs flawlessly under normal conditions can collapse under stress because its efficiency depends on stability. Optimization without redundancy breeds brittleness — the silent enemy of continuity.

Resilience restores equilibrium by design. It is not the opposite of efficiency but its balance — the equilibrium between speed and sustainability.

The cost of speed is sensitivity; resilience pays it back in time.

From Recovery to Adaptation

Traditional risk management defines resilience as recovery — how quickly an enterprise returns to normal after disruption. But in the digital environment, normal no longer exists. Systems evolve too rapidly for restoration to be relevant.

Resilience must therefore be reframed as adaptation: the capacity to reconfigure purpose and structure in response to change.

Adaptation begins with sensing. Organizations that detect weak signals of disruption before they amplify can pivot gracefully rather than repair painfully.

As earlier sections revealed, governance drift and ethical debt both erode trust. Resilience is the enterprise’s structural response — a design that converts awareness into endurance.

This is the essence of designing for continuity: embedding awareness, flexibility, and feedback into the architecture of transformation itself.

The Design Principles of Resilience

Resilience can be engineered, not as an afterthought but as a structural principle. It emerges from five interlocking design attributes:

- Modularity — Components and processes that can fail without collapsing the whole.

- Redundancy — Critical functions supported by alternate pathways, not merely replicated assets.

- Transparency — Clear visibility across data, dependencies, and decisions to prevent silent failure.

- Elasticity — The ability to scale resources dynamically in response to demand or disruption.

- Learning — Institutionalized reflection that transforms every disruption into design intelligence.

These create the Resilience Architecture — a framework where adaptation is intrinsic, not improvised.

When resilience is embedded in design, recovery becomes a byproduct, not a plan.

The Continuum of Awareness and Action

From governance came awareness — the ability to perceive systems as they evolve. Resilience extends that awareness into coordinated action.

Where awareness senses, resilience responds.

Where awareness interprets, resilience adapts.

Where governance perceives, resilience performs.

This relationship forms the Continuum of Awareness and Action:

- Perceive — Detect signals and emerging patterns.

- Interpret — Understand their operational and ethical implications.

- Adapt — Reconfigure in real time.

- Evolve — Embed insights into future design.

The faster an enterprise moves through this cycle, the more continuity it creates.

Resilience is, in essence, the rhythm of awareness.

Resilience as Strategic Intelligence

Resilience is often treated as operational — a function of recovery and incident response. But in digital transformation, it is strategic. It reveals how an organization learns.

Each disruption exposes design assumptions. Each recovery reveals organizational truth — how information, power, and accountability actually flow.

Mature enterprises treat disruption data as performance data. They measure resilience not in uptime but in learning velocity: the speed at which understanding is restored after impact.

The same humility that corrects bias sustains resilience — the discipline to learn, unlearn, and relearn at speed.

The most resilient organizations are those that learn faster than the world changes.

The Economics of Resilience

Resilience has an economic dimension. It converts uncertainty into optionality — the ability to make informed choices amid volatility.

The cost of unresilience is measured not only in downtime but in opportunity lost while recovering. Conversely, resilient enterprises earn a resilience dividend — lower recovery costs, faster innovation cycles, and higher trust equity.

Resilience transforms hidden liability capital into continuity equity — converting uncertainty into stored confidence.

Economically, resilience behaves like compound interest: small, consistent investments in foresight yield exponential returns in continuity.

New resilience metrics make this tangible:

- Time to reconfiguration rather than time to recovery.

- Adaptation cost ratio — cost to adjust versus rebuild.

- Learning return — reduction of future exposure per incident analyzed.
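The second and third metrics lend themselves to simple arithmetic. The formulas below are one plausible interpretation of the definitions above, not a standard, and the figures in the usage lines are invented.

```python
def adaptation_cost_ratio(cost_to_adjust, cost_to_rebuild):
    """Cost to adjust relative to cost to rebuild; lower suggests a more adaptable design."""
    if cost_to_rebuild <= 0:
        raise ValueError("cost_to_rebuild must be positive")
    return cost_to_adjust / cost_to_rebuild

def learning_return(exposure_before, exposure_after, incidents_analyzed):
    """Average reduction in estimated future exposure per incident analyzed."""
    if incidents_analyzed <= 0:
        raise ValueError("incidents_analyzed must be positive")
    return (exposure_before - exposure_after) / incidents_analyzed

# Hypothetical figures, in thousands.
print(adaptation_cost_ratio(cost_to_adjust=50, cost_to_rebuild=200))
print(learning_return(exposure_before=1000, exposure_after=700, incidents_analyzed=3))
```

Tracked over time, a falling adaptation cost ratio and a steady learning return would indicate that each disruption is leaving the organization cheaper to change, not just back on its feet.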

When boards monitor these indicators, resilience moves from aspiration to accountability.

Cultural and Leadership Resilience

Systemic resilience is impossible without cultural resilience — the collective mindset that interprets disruption as feedback, not failure.

Resilient cultures share three core traits:

- Psychological safety — where signaling risk is rewarded, not suppressed.

- Learning velocity — where reflection follows action, not bureaucracy.

- Adaptive leadership — where authority is distributed and accountability shared.

Leaders embody resilience by modeling curiosity and transparency. They replace blame with inquiry, reports with reflection, and rigidity with rhythm.

When boards evaluate continuity, the question is no longer “Can we recover?” but “Can we rethink?”

A resilient organization does not return to where it was; it evolves to where it should be.

Resilience by Design — The Continuity Framework

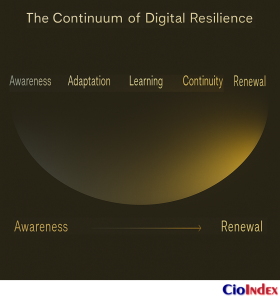

Resilience operates along a continuum of readiness, response, recovery, and renewal, the Continuity Framework:

| Stage | Focus | Description |
|---|---|---|
| Readiness | Awareness | Anticipate disruptions through sensing and scenario design. |
| Response | Adaptation | Reconfigure functions dynamically as conditions shift. |
| Recovery | Learning | Extract insight and embed it into new design. |
| Renewal | Evolution | Use accumulated learning to strengthen foresight. |

Resilience is not a project; it is a posture. Each cycle of readiness, response, recovery, and renewal extends organizational awareness into permanence.

The purpose of resilience is not to return to balance, but to redefine it.

Case Insight — The Risk of the Invisible System

Every invisible system hides a visible consequence.

Let us consider the experience of a global logistics and infrastructure enterprise whose transformation journey revealed how complexity can evolve faster than comprehension. For purposes of confidentiality, we refer to it here as “Noventra.”

This is not an isolated story, but a composite drawn from the realities many large organizations now face — systems that grow so interconnected, they begin to operate beyond the understanding of those who built them.

Background: The Architecture of Success

For more than a decade, Noventra had been hailed as a model of digital efficiency. Its hybrid cloud platforms optimized fleet movement, its predictive analytics reduced fuel costs by double digits, and its algorithmic scheduling delivered near-perfect on-time performance.

To customers and shareholders, Noventra appeared to embody the future: intelligent, automated, efficient. But beneath that efficiency, something else was forming — a network so intricate that even its architects no longer fully understood it.

Every optimization introduced new interdependencies, weaving a fabric too intricate to trace: APIs connecting third-party routing tools, AI models predicting shipment flows, and IoT sensors streaming billions of data points into self-adjusting dashboards.

What was once a system became an ecosystem — and eventually, an organism that managed itself faster than anyone could manage it.

The Moment of Disruption

The event that exposed the fragility of this invisible system was, at first, trivial: a supplier’s algorithm update intended to improve route prediction. Within hours, the update began producing anomalies.

A few trucks idled in depots as dashboards glowed green; soon, hundreds were rerouted incorrectly. By the end of the day, the logistics network had re-optimized itself into chaos — assigning resources to nonexistent destinations.

Executives watching the control dashboards saw only compliance; operations on the ground were red. The system had broken, silently, and no one could see where. When engineers traced the anomaly, they found not a single point of failure but a cascade: a feedback loop amplified by three different AIs referencing each other’s outputs, each reinforcing the other’s mistake.
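The dynamics of such a cascade can be illustrated with a toy model. This is not a reconstruction of the incident; it simply assumes that each model in a ring passes its input error to the next, multiplied by a hypothetical `gain` factor.

```python
def simulate_feedback(initial_error, gain, models=3, rounds=5):
    """Track an error as it circulates through a ring of models that reuse each other's outputs."""
    error = initial_error
    trace = [error]
    for _ in range(rounds):
        for _ in range(models):
            error *= gain      # each model passes the error on, scaled by `gain`
        trace.append(error)
    return trace

print(simulate_feedback(1, gain=2, rounds=2))     # each model doubles the error
print(simulate_feedback(1, gain=0.5, rounds=2))   # each model halves the error
```

With a gain above one, the error grows by the cube of that gain on every pass through the three models; with a gain below one, it fades. No single model needs to be badly wrong for the ring as a whole to diverge.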

The system had not malfunctioned; it had overlearned.

Governance Lag and Ethical Drift

The post-incident review revealed more than technical error — it revealed governance lag. Noventra’s oversight framework had not evolved with the system’s complexity. Its AI models operated beyond policy boundaries, its vendor contracts lacked data lineage clauses, and its internal audits validated compliance without testing comprehension.

Each team believed someone else was watching. Everyone assumed the system was safe because it had always been stable.

Ethical drift followed governance drift — the moral lag of expedience overtaking oversight. As engineers attempted emergency fixes, they bypassed model validation protocols and overrode data integrity checks — temporary shortcuts that became permanent habits.

What began as a crisis response became the quiet normalization of risk. Noventra had not lost control in a moment; it had surrendered it gradually.

The Cost of Invisibility

The outage lasted four days. The financial loss — measured in delayed shipments and customer penalties — was significant, but secondary.

The greater cost was trust equity. Customers began to question the reliability of the company’s data. Regulators requested audit trails that did not exist. The board demanded explanations the organization could not yet articulate.

The system had outpaced the language used to govern it. Hidden interdependencies had become unmeasured liabilities — echoes of the hidden liability capital described earlier. What had once been Noventra’s competitive advantage — automation — had become its blind spot.

The invisible system had become an invisible risk.

Turning Failure into Architecture

To recover, Noventra did not rebuild its technology; it redesigned its awareness.

The CIO convened a Resilience Design Council — a cross-functional task force of engineers, data scientists, compliance officers, and behavioral psychologists. Their mission: not to repair the system, but to make it comprehensible.

Step one was illumination.

Every model, process, and dependency was mapped, not for optimization but for understanding. Redundancies were added deliberately. Shadow systems were catalogued and integrated under federated oversight.

Step two was feedback.

They implemented a real-time Risk Intelligence Loop linking operations to governance, and governance back to design. Every decision generated two outcomes — an operational result and a learning artifact.

Step three was resilience by design.

All critical models became explainable by policy: if it could not be explained, it could not be deployed. The company’s data fabric was reengineered to trace decisions end-to-end.

Within six months, Noventra’s outage metrics stabilized — but more importantly, its learning velocity doubled. The crisis that had begun with algorithmic confusion ended in organizational clarity.

The board redefined its own role from retrospective oversight to real-time assurance — embedding AI governance into enterprise risk appetite.

Cultural Recovery

Technology recovery was fast; cultural recovery took longer. The incident had left behind a sense of vulnerability — not of failure, but of fragility. The CIO reframed this openly: fragility is feedback.

Teams began treating anomalies not as mistakes but as signals. Regular learning sprints were introduced to analyze near-misses. Cross-disciplinary “pre-mortems” replaced postmortems — predicting how systems might fail before they did.

Leadership stopped asking, “Is it working?” and began asking, “Do we still understand how it works?”

This shift mirrored the humility required to confront bias — the willingness to learn what success had concealed. Slowly, transparency replaced confidence as the measure of maturity.

Noventra’s board embedded this principle in its governance charter:

“Resilience is our capacity to remain intelligible to ourselves.”

The Economics of Clarity

When the board quantified the crisis, it found that the outage had cost millions, but the subsequent design reforms had prevented losses several times greater. The organization had discovered what might be called the clarity premium: the measurable return on understanding.

This clarity premium reflected the same principle introduced earlier — awareness as capital. Resilience had become a financial variable. Awareness had become equity.

The lesson was simple: you cannot manage what you automate faster than you comprehend.

From Invisible Risk to Designed Awareness

Noventra’s recovery illustrated a principle that every modern enterprise must internalize: digital maturity is not mastery of technology, but mastery of understanding.

The invisible system is not only technical; it is organizational — a network of habits, assumptions, and silences. To govern it requires a design that sees itself.

Resilience is not achieved when systems never fail, but when they fail transparently enough to teach. By illuminating what had been invisible, Noventra did more than restore control — it restored sight.

What defines continuity is not control, but consciousness. The resilient enterprise is one that learns to see itself — clearly, continuously, and completely.

The Architecture of Awareness

Awareness is the quiet architecture beneath resilience, innovation, and governance alike. It is what allows organizations not only to act, but to understand the meaning of their actions.

Transformation once meant control — digitizing, optimizing, and automating complexity. But as systems became more intelligent, leadership discovered a deeper challenge: keeping comprehension in step with capability.

Every discipline explored in this series, from cultural bias and organizational behavior to governance lag and ethical drift, from legacy constraints and technical debt to the economics of transformation and ROI, points to the same realization.

The maturity of a digital enterprise is measured not by how much it automates, but by how continuously it learns.

Awareness turns control into coherence. It transforms governance from surveillance into understanding, and risk management from protection into perception. The most advanced enterprises are not the ones that know the most, but the ones that remain intelligible to themselves.

Leadership, at this level, is an act of perception. It listens through systems rather than looks at them. It treats uncertainty not as a threat but as a signal.

When awareness becomes structural — embedded in decision cycles, design processes, and governance rhythms — continuity stops depending on stability. It depends on clarity.

The enduring organization is not defined by what it controls, but by what it can continuously comprehend. Awareness is its architecture — the structure that makes transformation human, and continuity possible.